High Energy Physics Libraries

Webzine

High Energy Physics Libraries

Webzine

High Energy Physics Libraries

Webzine High Energy Physics Libraries

Webzine |

|

HEP Libraries Webzine

Issue 13 / October 2006

Introduction

Open Access is one of the most popular recent terms. The definition of an open access publication has been largely agreed upon: the author or copyright holder grants to all users a free, irrevocable, world-wide, perpetual right of access to, and a licence to copy, use, distribute, perform and display the work publicly and to make and distribute derivative works in any digital medium for any reasonable purpose, subject to proper attribution of authorship, as well as the right to make small numbers of printed copies for their personal use. A complete version of the work and all supplemental materials, including a copy of the permission as stated above, in a suitable standard electronic format is deposited in at least one online repository that is supported by an academic institution, scholarly society, government agency, or other well-established organisation that seeks to enable open access, unrestricted distribution, interoperability, and long-term archiving [1].

Widely and controversially discussed by experts [2] [3] is the question: what would be the most successful way to bring scientific authors to provide open access to their publications?

In order to accomplish the open access idea within universities and research institutions, an important factor is to enhance the visibility and usage of Institutional Repositories – IRs (the so called green road to open access) and Open Access Journals (golden road to open access). Usage is employed in the passive sense of reading as well as in the active sense of citing a publication.

DINI (the German Initiative for Networked Information) and DFG (German Research Foundation) - with support from OSI, SPARC Europe, SURF and JISC - organised a workshop to discuss enhanced and alternative metrics of publication impact given the fact that an increasing number of scientific publications are available through open access in Institutional Repositories [4]. The idea for such a workshop originated during an informal meeting during the CERN workshop on Innovations in Scholarly Communication (OAI4) in Geneva [5] where representatives of the organisations mentioned before came together. It was decided to focus on three different aspects

- Alternative metrics of impact based on usage data (the LANL approach) [6]

- Interoperable and standardised usage statistics (Interoperable Repository Statistics and COUNTER) [7]

- Open Access citation information [8]

Forty-two experts from eight countries accepted the invitation to this workshop. It was understood that the aim of this workshop was a pragmatic one. It should not serve as a forum for the development or redefinition of the concept of impact or scientific visibility. Starting from the well-known limitations of the current journal impact factors [9] a number of existing approaches were discussed which consider a wider basis of data and other algorithms to process these data in order to produce quantitative metrics of scientific visibility. Metrics were understood as the processing of (eventually aggregated) raw data – impact or status are derived from rankings based on those metrics. The collection of raw data, aggregation and processing should be transparent in order to ensure the acceptance of possible rankings.

The selection of approaches presented in the workshop did not claim completeness but was regarded as promising for the future development of institutional repositories and therefore of open access to the scientific literature. Consequently there was an introductory session where Lars Bjørnshauge (Lund University Library) and Norbert Lossau (Bielefeld University Library) gave their view of the next generation of institutional repositories as a network within other networked services.

Lars Bjørnshauge gave a concise overview of the past and present situation of institutional repositories (IR) before he sketched his views for the future. However new the topic, there is already a kind of history of IRs. They began as an additional channel for dissemination of publications and rose to the attention of a greater audience with the debates about open access to scientific publications. In his opinion it is important to keep in mind the different purposes for which IRs are run in a University or research institution. Besides the dissemination of publications already mentioned IRs are often meant to record the scientific published output of an institution. They can (and increasingly will) play a role in research assessment [10] and, last but not least, serve as input for scientist’s CVs.

Lars defined a number of key issues on which IR development has got to focus on its way from experiment to service. He pointed out that because the development of institutional repositories has been very diverse, there is a low degree of uniformity in standards, definitions, formats and protocols. This is the reason why it is difficult to develop secondary services based on institutional repositories at the moment.

Those key issues or critical success factors for IRs are:

- the adoption of standards,

- the modelling and deployment of workflows,

- the long term availability of the electronic documents contained in an IR,

- the question of impact and visibility of electronic documents in IRs and

- filling the IRs with content.

Norbert Lossau (Bielefeld University Library) presented an overview of the European project DRIVER which will most probably run from June 2006 till August 2008. It intends to build a European IR infrastructure. As a supporting action a series of strategic studies will be performed that intend to bring more detailed information and analysis about the IR situation in Europe than the CNI-JISC-SURF Amsterdam 2005 report [11] could which was the very first of its kind.

DRIVER acknowledges that national programmes for IR development and standardisation should be set up. DRIVER is intended to serve as a demonstrator and test bed for transnational access to existing IRs. Its main aims are personalised access to virtual collections, quality of services, interoperability, extensibility and integration of local services. DRIVER should also serve as a basis for other services which are built on top.

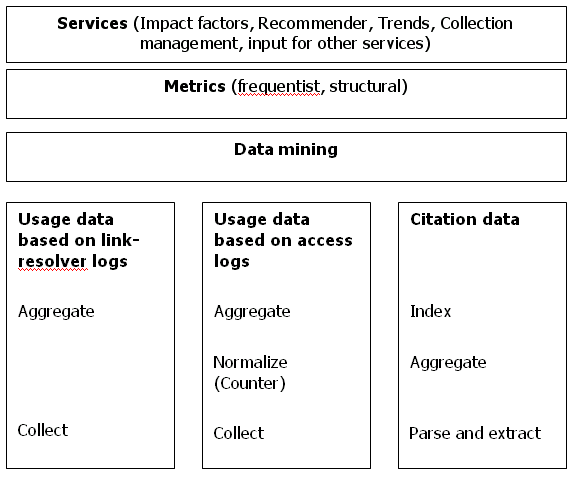

Johan Bollen (Los Alamos National Laboratory) presented the work done at LANL and California State University with respect to alternative metrics of journal impact based on usage data. Usage data (the more precise term is access data as usage cannot be defined precisely) is collected through link resolvers. In order to do that it is essential to create a digital library infrastructure that enables linking servers to record the biggest part of usage. IRs should be able to become part of that infrastructure i.e. they should at least be OpenURL enabled. Linking server logs are then serialised as OpenURL ContextObjects [12] and exposed by an OAI-PMH data provider. The repository retains full control of what is exposed and how, e.g. anonymization of user IDs.

A trusted third-party (or federation thereof) can harvest and aggregate logs from a range of repositories. Next the aggregated logs are subjected to data mining techniques which derive item networks from access sequences recorded in the logs under the assumption that similar items are accessed by similar users. These networks can, for example, be used to construct recommender services useful to both local institutions as well as third-party aggregators.

As a last step, different metrics can be applied to the aggregated data. In addition to the well-known and popular frequentist metrics (e.g. used to calculate the journal impact factor) there is also the possibility to derive structural metrics of quality from the generated item networks leading to more complete, fine-grained and reliable evaluation of scholarly communication. Furthermore, log data is free from publication delays and can be used to track contemporary trends in science immediately. At California State University nine major institutions participated in a field experiment from November 2003 to August 2005. Collected journal usage data was processed with a weighted PageRank algorithm resulting in a different list of journal ranking compared to ISI impact factors [13].

Tim Brody (University of Southampton) gave an overview of the project Interoperable Repository Statistics (IRS) which plans to investigate the requirements for UK and international stakeholders, design an API for gathering download data, build distribution and collection software for IRs, and develop generic analysis and reporting tools. There has been a report on stakeholder requirements derived from interviews with domain experts [14]. The aim is not to have theoretically exact usage data, but comparable usage data. However, there is no draft or RFC (Request for comments) document at the moment which summarises IR requirements for comparable usage data.

Workshop participants strongly felt the need for supporting actions in this field incorporating COUNTER and Project IRS activities in order to define criteria and working definitions that can be tested on a select number of IRs.

Sebastian Mundt (Hochschule der Medien Stuttgart) gave an update on COUNTER activities. Currently there are two so-called Codes of Practice in operation: one for Journals and Databases (Release 2) [15], and one for Books and Reference Works (Release 1) [16]. Usage data is processed at the source (publisher site or IR) and accuracy of the usage reports is tested by an external auditor within a certain tolerance (-8% to +2%). It became obvious that open access sites like IRs have to cope with additional problems like automated web-crawler access in normalising their usage data. The question of how to exclude spider and robot access, a problem especially occurring in Open Access Repositories, was raised and regarded as an important field of action for the future. A specific code of practice for IRs does not have the highest priority within COUNTER at the moment. Workshop organisers and participants will actively engage in closer co-operation with COUNTER and IRS to ensure that these important issues will be solved and promoted (e.g. in the DINI Certificate Document and Publication Repositories) [17].

Jeff Clovis (Thomson Scientific) gave an overview of the Web Citation Index (WCI). This new service started in November 2005 to cover material available in IRs. WCI is a commercial product which will become fully available later in 2006. Currently 38 IRs are indexed. Thomson Scientific content editors have already selected an additional 500 for inclusion. OpenDOAR [18] and other IR listings have been taken as a starting point. The published version of an article is indexed in Web of Science, the preprint or postprint version accessible in an IR is indexed in WCI – with links between the two services.

Workshop participants agreed during the discussion that there is a need to standardise citation information within IRs, so they can become a solid basis for commercial services like WCI as well as open public services.

Ralf Schimmer (Max Planck Society) gave an update of open access activities within the Max Planck Society and of the co-operation with Thomson for WCI. The MPG eDoc-Server (MPG’s institutional repository) is not indexed in WCI yet but this is planned for April 2006. eDoc contains about 10,000 documents at the moment of which about 3,400 are open access. President, Vice-President and Nobel Laureates of the MPG shall be persuaded to deliver open access versions of their published work to create a “me too” effect within the MPG comparable to the “cream of science” effort in the Netherlands. Tests with the WCI showed that there are still issues concerning the linking between different versions of a document (preprint, postprint in IR, postprint on publisher site).

Tim Brody (University of Southampton) confirmed the versioning problem when he reported on the Open Access Citation Index Services. However, extraction and parsing of reference data in IRs could serve at least two functions:

On the one hand it would allow the controlling of references during the upload of new documents. This would enable authors to correct citations, especially if reference data could be checked against existing services like CrossRef, Web of Knowledge, Google Scholar etc. The catchword for such a service could be “Click & Canonical”. Reference parsers are already deployed in IRs for rather homogeneous research communities like High Energy Physics (e.g. in CDSWare) [19]. These solutions can not easily be applied to a broader spectrum of disciplines or a general solution for reference parsing.

On the other hand references in IRs could be displayed and exported as metadata. This also needs well-structured references and a standardised form of exchange. This exchange could be well realised as an extension of the OAI-PMH using XML ContextObjects [20].

For both scenarios the situation can be improved, however, by supplying author tools (like RefWorks [21], Endnote, the BibTeX format) to support the structure of scientific writing and citing and thus increase the quality of input data.

Discussions in the breakout sessions on the second day of the workshop led to the conclusion that all three aspects covered by the workshop are relevant for the visibility of scientific publications.

OpenURL techniques (including metadata-based identification of documents if persistant identifiers are not available) were regarded as promising and the possibility of discriminating documents by subjects was demanded. Standardised link server logs, which could even be derived from apache web server logs (although this is a rather noisy approach), would provide the basic data. Participants agreed that linking servers are a crucial factor in DL concepts. This means creating a digital library infrastructure that enables linking servers to record the biggest part of usage. Therefore all digital library services (catalogue, online databases, document delivery, repositories, etc.) have to be involved. Deployment of linking servers in Germany is incomplete in two ways: quite a number of library systems do not use linking servers at all, and some of those that do use them do not connect all services exhaustively.

Linking server logs can be serialised as XML-ized OpenURL ContextObjects and exposed by an OAI-PMH repository. The repository retains full control of what is exposed and how, e.g. anonymization of user IDs. A trusted third-party (or federation thereof) can harvest and aggregate logs from a range of repositories. The aggregation of usage data should be performed on different levels: local, global, and community based. Data mining and analysis must become end-user services. It was agreed that there should be demonstrators for data mining techniques and results.

This breakout group discussed what is needed from an infrastructural perspective of IRs to achieve global interoperable usage statistics and which services can be built on standardised usage statistics.

Participants agreed upon the fact that there is still a lack of standards. Even in projects like COUNTER or IRS there are currently not yet adequate standards that could easily be adapted to IRs. Furthermore, the number of IRs complying to standards (like the DINI certificate) is too small.

The group described a particular problem concerning the document space covered by the current services: the services do not include enough or the proper documents from most subject specific perspectives. Because there are different views or subject perspectives on the information, different needs appear for investigating those document spaces. The participants agreed that there is a necessity to have 1) linking statistics, 2) citation statistics and 3) usage statistics, and even to use a combination of these different metrics. This would allow different kinds of analyses of the documents e.g. when the documents are used for teaching or by specific research communities. There is no urgent need, for example, for a lecture book to be cited (in other words to gain prestige), but rather that students read it (which means it gains popularity). In this case therefore, it would be more appropriate to collect access rather than citation statistics [22].

The group pointed out that there is a need for agreed definitions and that the purpose of statistics for different stakeholders should be defined within a scope statement of the service offered.

Further research on the meaning of statistics was considered necessary, especially regarding the mathematical and technical background, the interpretation (e.g. correlation with other factors), relational statistics, and context based statistics.

In order to put these ideas into practice, it was suggested that an existing proposal (e.g. COUNTER) could be checked against the criteria and against a good selection of repositories to identify areas needing development.

This group’s answer to the question of what is needed from an infrastructural perspective of IRs to support citation indexing and analysis, was “interoperability”. It was seen necessary to integrate open access and commercial citation data, that is to be able to receive the citation data of all resources, not only of a few.

IRs should ideally provide a possibility to import the references from articles, and an online tool to add references to an article. The participants agreed that only added-value can really convince users to deposit their documents in IRs. Such added-value could be that the IR automatically checks for correct references and standardises them (auto-finds DOIs, etc.).

The group discussed how reference parsing tools can be integrated. An online service which parses for references and which allows a parallel upload of BibTeX or endnote references was suggested. In order to integrate reference-resolving services, existing services, like CrossRef, Web of Science, or Google Scholar, should be approached.

The group also discussed how storage and communication of reference data can be standardised. Standardisation of a Reference Exchange Protocol, for example realised as an extension of the OAI-PMH using XML ContextObjects, seemed to be reasonable and possible.

Breakout sessions reported their findings to the final plenary session. The need to fill the repositories in order to reach a critical mass was strongly highlighted throughout the discussion. It was agreed that all three topics covered within the workshop pose different advantages and disadvantages and need different lines of action.

Usage data collection based on link resolver systems has been successfully performed in a huge field trial at CalState and LANL. It has to be tested under different basic conditions in Europe. The focus lies on the development of a suitable digital library infrastructure(s) and on the question which organisation(s) should aggregate and process the data.

In the field of usage data based on access logs, standardisation and collaborative efforts have to be intensified in order to come to comparable usage analyses for IRs and publisher sites. Workshop participants strongly felt the need for supporting actions in this field incorporating COUNTER and Project IRS activities in order to define criteria and working definitions that can be tested on a select number of IRs. Workshop organisers and participants will actively engage in closer co-operation with COUNTER and IRS to ensure that these important issues be solved and promoted (e.g. in the DINI Certificate Document and Publication Repositories) [23].

Workshop participants agreed that there is a need to standardise citation information within the IRs, so they can become a solid basis for commercial services like WCI as well as open public services. Efforts in citation analysis have to be focused on author tools, reference resolving and exchange. Results have to be complementary to already existing solutions and players in the field (like WCI, Google Scholar, etc.). It is important to keep in mind, however, that there are a variety of options to calculate and rank the importance and visibility of scientific publications (Fig. 1). Collecting data, aggregating, and processing it have become more separated than they were in past times. One striking example is in the way different metrics are now applied to ISI citation data. Johan Bollen and his group compared the "classic" impact factor with a weighted PageRank algorithm and proposed a combination of both (the Y-factor) [24].

In order to follow all aspects relevant to the visibility of scientific publications, workshop participants agreed to form three working groups which would take additional experts on-board. Discussions have to be continued at an international level, especially with the European Science Foundation [25] and the Knowledge Exchange Office [26]. Based on the results of the workshop these working groups will formulate requirements and implementation details in all three fields more thoroughly. Thus they will guarantee that this successful workshop will have sustainable consequences.

|